Building Your MLOps Career: Essential Open-Source Tools to Master for Interviews

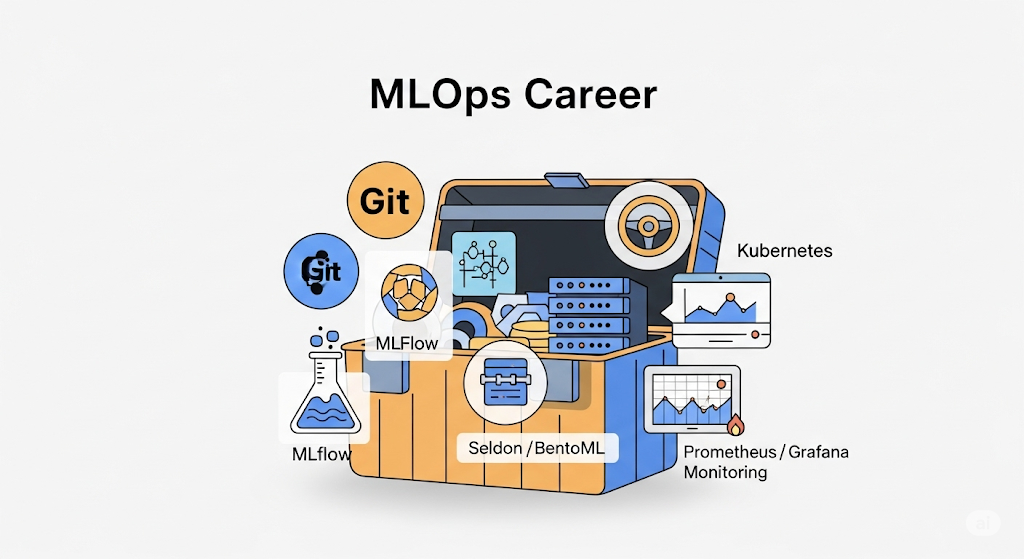

I. Introduction: Why MLOps Skills Can Make or Break Your Interview 🎯

In today’s competitive AI job market, employers aren’t just looking for candidates who can train a high-performing model—they want professionals who can design, deploy, monitor, and maintain that model in production. This is where understanding the full MLOps lifecycle becomes a career-defining advantage.

Recruiters and hiring managers now expect candidates to be fluent in both machine learning algorithms and the operational workflows that support them. That means you’ll need to confidently discuss topics like pipeline orchestration, model versioning, deployment strategies, and monitoring systems. In an interview setting, this knowledge doesn’t just help you answer technical questions—it also provides richer examples for behavioral interview prompts like “Tell me about a time you handled model degradation in production”.

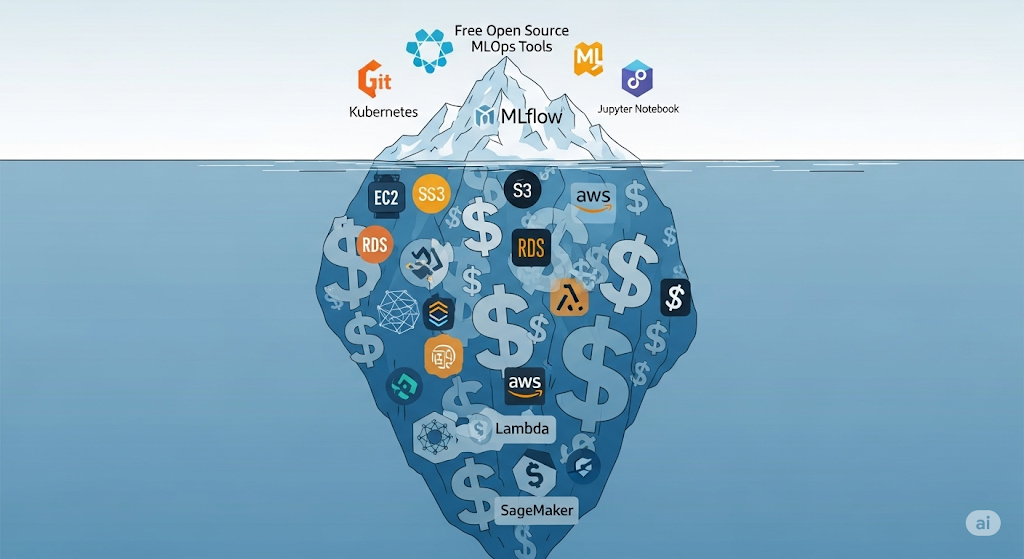

By knowing the right tools, whether that means a managed platform like SageMaker or open-source solutions like MLflow, Airflow, and Seldon Core, you position yourself as someone who can hit the ground running from day one. This article is part of our comprehensive Ultimate Guide to Cost-Effective Open-Source MLOps in 2025, which covers selecting, implementing, and optimizing MLOps tools for both startups and enterprise environments.

As Google Cloud’s MLOps Guidelines point out, mastering these workflows bridges the gap between experimentation and production, ensuring that machine learning initiatives deliver measurable business value—not just promising prototypes.

💡 Pro Tip: For a structured path to mastering these skills, consider the AWS Certified Machine Learning Specialty – Udemy course, a comprehensive option that covers both cloud-native and open-source MLOps workflows.

II. Common MLOps Interview Question Categories 📋

When preparing for interviews that involve SageMaker vs open source discussions, it’s not enough to just memorize definitions—you need to understand the application of tools and workflows. Most MLOps interview questions fall into four broad categories, each testing a different part of your technical and strategic thinking.

1. System Design & Architecture Questions 🏗️

Expect prompts like “Design an end-to-end MLOps pipeline for a real-time recommendation system”. These questions assess your ability to think at scale, balancing cost, performance, and maintainability. You’ll often need to compare trade-offs between a fully managed service such as AWS SageMaker and a self-hosted Kubernetes-based setup. Interviewers want to see if you can address high availability, disaster recovery, and horizontal scaling in your designs.

2. Data Versioning & Experiment Tracking Questions 📂

Hiring managers might ask how you’d ensure reproducibility in an ML project with multiple experiments and team members. This is where tools like MLflow, Weights & Biases (W&B), or Neptune come into play. Being able to explain when to choose an open-source tracker vs a managed solution is crucial. For a deeper dive, see our related cluster, Lean Experiment Tracking: MLflow vs. W&B vs. Neptune.
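To make the reproducibility point concrete, here is a minimal sketch of the kind of bookkeeping a tracker does for you: deriving a deterministic fingerprint for a run from its hyperparameters and a hash of the training data. It uses only the Python standard library, and `run_fingerprint` and the sample inputs are hypothetical names for illustration, not part of MLflow, W&B, or Neptune.

```python
import hashlib
import json


def run_fingerprint(params: dict, data: bytes) -> str:
    """Return a short, deterministic ID for a (params, data) pair."""
    # sort_keys makes the JSON independent of dict insertion order.
    canonical = json.dumps(params, sort_keys=True).encode("utf-8")
    digest = hashlib.sha256()
    digest.update(canonical)
    digest.update(hashlib.sha256(data).digest())
    return digest.hexdigest()[:12]


# Hypothetical inputs for illustration only.
dataset = b"feature,label\n1,0\n"
fp_first = run_fingerprint({"lr": 0.01, "epochs": 5}, dataset)
fp_reordered = run_fingerprint({"epochs": 5, "lr": 0.01}, dataset)
fp_changed = run_fingerprint({"lr": 0.02, "epochs": 5}, dataset)

# Same params and data give the same ID; changing a param gives a new one.
print(fp_first == fp_reordered, fp_first == fp_changed)
```

In an interview, the point to draw out is that two runs are only comparable if you can prove they used the same code, parameters, and data, which is what a tracker records automatically.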

3. Deployment & Model Serving Questions 🚀

Many interviews include a scenario like “How would you deploy a model that needs sub-100ms inference latency?”. This is your chance to compare SageMaker endpoints to open-source serving frameworks like Seldon Core or BentoML. Discussing the implications of each—such as cold start latency, scaling policies, and CI/CD integration—demonstrates practical deployment experience.
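When you answer a latency question like this, it helps to talk in percentiles rather than averages, because a few slow requests can break a latency target even when the mean looks fine. The sketch below is one way to check a p99 budget using only the standard library; the function names, the 100 ms budget, and the sample measurements are assumptions made for illustration.

```python
import statistics


def latency_percentile(samples_ms: list[float], pct: int) -> float:
    """Return the pct-th percentile (1-99) of latency samples."""
    # quantiles(n=100) returns the 99 cut points between percentiles.
    cut_points = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return cut_points[pct - 1]


def meets_slo(samples_ms: list[float], budget_ms: float = 100.0, pct: int = 99) -> bool:
    """True when the chosen percentile is within the latency budget."""
    return latency_percentile(samples_ms, pct) <= budget_ms


# Hypothetical measurements: mostly fast, with a few slow outliers.
samples = [20.0] * 95 + [150.0] * 5
print(latency_percentile(samples, 50))  # median looks healthy
print(meets_slo(samples))               # but the p99 check fails
```

Here the median is 20 ms while the p99 is 150 ms, so the endpoint misses a 100 ms p99 target despite looking fast on average.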

4. Monitoring & Retraining Loop Questions 🔄

Monitoring is an often-overlooked interview area. Employers want to know whether you can design a feedback loop that triggers retraining when model performance drops or input data begins to drift. You should be able to contrast SageMaker’s built-in Model Monitor with open-source stacks, such as Evidently AI paired with Prometheus and Grafana. Knowledge of alert thresholds, retraining automation, and SLA compliance can set you apart.

💡 Pro Tip: For hands-on prep, platforms like Exponent offer curated MLOps interview practice problems that simulate real-world questions.

III. Tool #1 – MLflow: Versioning and Experiment Tracking Mastery 🧪

For anyone preparing to ace SageMaker vs. open-source interview questions, MLflow is a must-know open-source tool. Its popularity stems from being lightweight, flexible, and widely adopted in production MLOps pipelines. Recruiters and hiring managers often expect you to know how to leverage it for model registry, artifact tracking, and experiment reproducibility—especially if the company prefers an open-source-first approach over managed services.

What to Know for Interviews 🎯

When interviewers ask about model lifecycle management, MLflow’s Model Registry should be front and center in your answer. You should be able to explain how it enables version control for models, supports staging/production transitions, and integrates with CI/CD pipelines.

- Model Registry → Controls and tracks model versions with status transitions (e.g., Staging → Production).

- API Usage → Demonstrate how to log parameters, metrics, and artifacts using the Python API.

- Artifact Tracking → Explain storing datasets, model files, and plots to maintain reproducibility.
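If it helps to reason about what a registry actually does, here is a toy in-memory version that mimics version numbering and stage transitions. It is an illustration only and deliberately not MLflow's API: `ToyRegistry`, `register`, `promote`, and the artifact URIs are hypothetical. Recent MLflow releases also steer users toward aliases rather than fixed stages, so check the docs for the version you use.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    stage: str = "None"


@dataclass
class ToyRegistry:
    """In-memory stand-in for a model registry (not MLflow's API)."""
    versions: dict = field(default_factory=dict)

    def register(self, name: str, artifact_uri: str) -> ModelVersion:
        # Each registration under the same name gets the next version number.
        history = self.versions.setdefault(name, [])
        mv = ModelVersion(version=len(history) + 1, artifact_uri=artifact_uri)
        history.append(mv)
        return mv

    def promote(self, name: str, version: int, stage: str) -> None:
        # Only one version may hold a stage; archive the previous holder.
        for mv in self.versions[name]:
            if mv.stage == stage:
                mv.stage = "Archived"
        self.versions[name][version - 1].stage = stage


registry = ToyRegistry()
registry.register("churn", "runs:/abc/model")  # hypothetical URIs
registry.register("churn", "runs:/def/model")
registry.promote("churn", 1, "Production")
registry.promote("churn", 2, "Production")
stages = [(mv.version, mv.stage) for mv in registry.versions["churn"]]
print(stages)
```

The useful talking point is the invariant: promoting version 2 automatically retires version 1, so there is never ambiguity about which model is live.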

📌 Pro Tip: Managed services like AWS SageMaker offer built-in experiment tracking, but knowing MLflow gives you vendor-agnostic skills that work across any cloud or on-premise setup.

Short CLI Example to Impress Interviewers 💻

Here’s a minimal MLflow CLI snippet you can mention or even demo live:

```bash
# Launch the MLflow tracking UI locally, backed by SQLite and a local artifact folder
mlflow ui --backend-store-uri sqlite:///mlflow.db --default-artifact-root ./artifacts

# Run an MLflow Project straight from a Git repository, overriding one parameter
mlflow run https://github.com/mlflow/mlflow-example -P alpha=0.5
```

This demonstrates that you understand both local experiment tracking and running a packaged project from a remote repository.

Free Learning Resource 📚

You can deepen your expertise through the MLflow Official Documentation, which covers advanced integrations, deployment strategies, and multi-user setups.

💡 Affiliate Tip: If you want structured learning, the Machine Learning DevOps Nanodegree – Udacity includes a hands-on MLflow module aligned with real interview scenarios.

IV. Tool #2 – Apache Airflow: Orchestrating ML Pipelines 🔄

If you’re preparing for SageMaker vs open source interview questions, Apache Airflow will almost certainly be on the list. Many organizations—especially those running self-hosted MLOps stacks—use Airflow for workflow orchestration because it’s scalable, battle-tested, and integrates seamlessly with data engineering and machine learning pipelines.

Key Interview-Ready Skills 🎯

When recruiters ask about end-to-end ML workflow design, you should be ready to discuss these Airflow competencies:

- DAG Creation → Writing Directed Acyclic Graphs to define a sequence of ML tasks, such as data preprocessing, training, evaluation, and deployment.

- Scheduling → Configuring pipelines to run periodically or on specific triggers (e.g., retrain models weekly or when new data arrives).

- Data Dependency Handling → Ensuring downstream tasks only run after upstream jobs have completed, preventing corrupted outputs.
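The dependency-handling idea can be shown without Airflow at all. The sketch below uses the standard library's `graphlib` (Python 3.9+) to compute a valid execution order for a hypothetical four-task pipeline and to reject a cyclic definition. It illustrates the ordering guarantee behind a DAG, not how Airflow's scheduler is implemented, and `execution_order` is an illustrative name.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(dependencies: dict) -> list:
    """Return tasks in an order where every upstream task comes first."""
    return list(TopologicalSorter(dependencies).static_order())


# Map each task to the set of tasks it depends on (hypothetical pipeline).
pipeline = {
    "preprocess": set(),
    "train": {"preprocess"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}
order = execution_order(pipeline)
print(order)

# A cycle is not a valid DAG, so the sorter refuses it.
try:
    execution_order({"train": {"evaluate"}, "evaluate": {"train"}})
    cycle_rejected = False
except CycleError:
    cycle_rejected = True
print(cycle_rejected)
```

This is why the "acyclic" part of DAG matters: without it there is no order in which every task's inputs are guaranteed to exist.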

📌 While AWS SageMaker Pipelines offers managed orchestration, knowing Airflow allows you to work in multi-cloud or on-premise setups, giving you flexibility and control.

Example DAG Snippet for a Simple ML Training Pipeline 💻

Here’s a minimal DAG that’s often used as an interview talking point:

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.python import PythonOperator


def train_model():
    # Placeholder for the real training logic
    print("Training ML model...")


# `schedule` requires Airflow 2.4+; older releases use `schedule_interval`
with DAG(
    dag_id="ml_training_pipeline",
    start_date=datetime(2025, 1, 1),
    schedule="@daily",
    catchup=False,
) as dag:
    train_task = PythonOperator(
        task_id="train_model",
        python_callable=train_model,
    )
```

This demonstrates that you can handle pipeline definition in code, a core MLOps skill.

Further Reading 🔗

For a detailed comparison of Airflow with other orchestration tools like Prefect and Dagster, check our in-depth guide Workflow Orchestration: Airflow vs Prefect vs Dagster—a must-read if you want to defend your tool choices in an interview.

💡 Pro Tip: If you’re targeting cloud-native orchestration, run Airflow with the KubernetesExecutor so each task gets its own pod and capacity scales with the workload. This gives you elasticity similar to a managed service such as SageMaker while keeping the control of an open-source stack.

V. Tool #3 – Seldon Core: Model Serving at Scale 🚀

When discussing SageMaker vs. open source in interviews, one tool that often comes up in the open-source category is Seldon Core. This Kubernetes-native framework enables scalable, production-grade model serving and integrates well into both cloud-native and on-premises MLOps stacks.

How to Talk About REST vs gRPC Serving in Interviews 🎯

Interviewers may test your understanding of model serving protocols.

- REST (Representational State Transfer) is simple, widely supported, and easy to debug—making it ideal for interoperability with non-ML systems.

- gRPC (gRPC Remote Procedure Calls) sends binary Protocol Buffers messages over HTTP/2, which typically gives lower latency and makes it a better fit for real-time inference at scale.

In an interview, you can frame this choice as latency versus compatibility, highlighting that Seldon Core supports both, providing organizations with flexibility based on their workload needs.
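One reason binary protocols tend to be faster is simply that the payloads are smaller and cheaper to parse. The sketch below compares a JSON body with a fixed-width binary encoding of the same feature vector. Note the assumptions: `struct` stands in for a real binary format such as Protocol Buffers, the `instances` field name and the feature values are made up, and payload size is only one of several factors that affect latency.

```python
import json
import struct

features = [0.12, 3.4, 5.6, 7.8]  # hypothetical feature vector

# Text encoding, roughly what a JSON body for a REST call might look like.
json_payload = json.dumps({"instances": [features]}).encode("utf-8")

# Fixed-width binary encoding: a 4-byte count followed by 32-bit floats.
binary_payload = struct.pack(f"<I{len(features)}f", len(features), *features)

# The receiver can recover the count and values without any text parsing.
count = struct.unpack_from("<I", binary_payload)[0]
decoded = struct.unpack_from(f"<{count}f", binary_payload, offset=4)

print(len(json_payload), len(binary_payload), count)
```

The trade-off is visible in the code itself: the JSON payload is readable in any debugger or browser, while the binary one needs a shared schema on both sides.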

📌 AWS SageMaker Endpoints also offer scalable model hosting, but they tie you to AWS infrastructure. Seldon Core’s open-source nature provides portability, which is valuable for multi-cloud or hybrid strategies.

Example YAML Deployment Spec 📄

Here’s a minimal example of deploying a model in Seldon Core using REST serving:

```yaml
apiVersion: machinelearning.seldon.io/v1
kind: SeldonDeployment
metadata:
  name: my-model
spec:
  predictors:
    - name: default
      replicas: 2
      graph:
        name: classifier
        implementation: SKLEARN_SERVER
        modelUri: s3://mybucket/mymodel
        endpoint:
          type: REST
```

This snippet is interview gold: it demonstrates your understanding of Kubernetes CRDs (Custom Resource Definitions) and your ability to map ML models to scalable deployment infrastructure.

Further Reading 🔗

For a deep dive into when to use Seldon Core versus a more lightweight serving framework, check out our comparison guide, Deploying Models: Seldon Core vs BentoML. This will help you justify tool choices when asked in a system design interview.

💡 Pro Tip: Mention Seldon Core’s A/B testing and canary release capabilities to stand out in interviews—they demonstrate an understanding of safe model rollouts in production, which hiring managers love to hear.
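If an interviewer asks what a canary release does under the hood, the core idea is weighted routing: a small, fixed share of requests goes to the new model while the rest stays on the stable one. The sketch below simulates that with the standard library. In a real serving platform the split is configured on the deployment rather than hand-written, and `pick_version` and the 10% weight are assumptions for illustration.

```python
import random


def pick_version(canary_weight: float, rng: random.Random) -> str:
    """Route one request: 'canary' with probability canary_weight, else 'stable'."""
    return "canary" if rng.random() < canary_weight else "stable"


rng = random.Random(42)  # seeded so the sketch is repeatable
routes = [pick_version(0.1, rng) for _ in range(10_000)]
canary_share = routes.count("canary") / len(routes)

# The observed share should land close to the configured 10%.
print(round(canary_share, 2))
```

A natural follow-up is the rollout policy: raise the weight in steps only while the canary's error rate and latency stay within bounds, and drop it to zero to roll back.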

VI. Bonus Tool – Evidently AI: Monitoring and Drift Detection 📊

In SageMaker vs. open source discussions, one key differentiator interviewers look for is whether you understand model monitoring, and Evidently AI is one of the most popular open-source tools for this purpose. Unlike training and deployment, monitoring is continuous and directly impacts business outcomes by detecting data drift and concept drift before they degrade performance.

Why Monitoring Experience Is a Differentiator in Interviews 🎯

Many candidates can describe how to train and deploy a model, but far fewer can explain how to maintain its health over time. In an MLOps interview, mentioning Evidently AI:

- Shows awareness of post-deployment model lifecycle management.

- Demonstrates familiarity with metrics and statistical tests for drift detection.

- Positions you as someone who can proactively prevent costly downtime.

In contrast, AWS SageMaker Model Monitor provides a fully managed solution for detecting data drift—but with vendor lock-in. Evidently AI, being open source, is portable and integrates with multiple stacks, making it attractive for multi-cloud or hybrid strategies.
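It is worth being able to explain at least one drift statistic from first principles. The Population Stability Index (PSI) is one common choice: bin a feature using the reference data, then compare the share of values per bin between reference and current samples. The sketch below is a plain-Python version written for this article, not Evidently's or SageMaker's implementation, and the 0.25 cut-off is a widely used rule of thumb rather than a fixed standard.

```python
import math


def psi(reference: list[float], current: list[float], bins: int = 10) -> float:
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so out-of-range values land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps log() defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


reference = [i / 100 for i in range(100)]      # hypothetical training-time feature
shifted = [0.5 + i / 200 for i in range(100)]  # same feature, shifted upward

print(psi(reference, reference))       # identical samples give zero
print(psi(reference, shifted) > 0.25)  # a large shift crosses the rule-of-thumb threshold
```

Being able to walk through this calculation makes it much easier to explain what a drift report is actually telling you.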

Example Drift Detection Report Snippet 🧪

Here’s a minimal Python example that could impress an interviewer:

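```python
# These import paths match older Evidently releases; newer versions
# reorganized the package, so check the docs for your installed version
from evidently.report import Report
from evidently.metric_preset import DataDriftPreset

# ref_df and curr_df are pandas DataFrames with the same columns:
# reference (training-time) data and recent production data
report = Report(metrics=[DataDriftPreset()])
report.run(reference_data=ref_df, current_data=curr_df)
report.save_html("drift_report.html")
```

This snippet shows you know how to compare historical (reference) data with current production data, generate a visual drift report, and save it as an HTML file that can be shared or linked from a dashboard.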

Further Reading 🔗

For a complete step-by-step tutorial on integrating Evidently AI with Prometheus and Grafana for real-time alerts, check our guide Free & Open-Source Model Monitoring: Evidently AI with Prometheus & Grafana. This will help you answer follow-up interview questions about end-to-end monitoring pipelines.

💡 Pro Tip: In interviews, pair this with a discussion of automated retraining triggers when drift exceeds a set threshold. This shows you can connect monitoring to action, a core skill for production MLOps.
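A retraining trigger can be as simple as a threshold on the share of drifted features. The sketch below assumes you already have a per-feature drift flag, for example parsed from a drift report. The function name, the feature names, and the 30% default threshold are all hypothetical, and in practice you would tune the threshold to your own tolerance for false alarms.

```python
def should_retrain(drift_by_feature: dict[str, bool], max_drift_share: float = 0.3) -> bool:
    """Trigger retraining when the share of drifted features exceeds a threshold."""
    if not drift_by_feature:
        return False
    drifted = sum(drift_by_feature.values())
    return drifted / len(drift_by_feature) > max_drift_share


# Hypothetical per-feature drift flags.
flags = {"age": True, "income": False, "tenure": True, "region": False}
decision = should_retrain(flags)
print(decision)
```

In an orchestrated pipeline, a check like this would typically sit in its own task, with the retraining job running only when it returns true.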

VII. Mock MLOps Interview Questions and How to Answer Them 🎤

When preparing for technical interviews, especially those involving comparisons between SageMaker and open-source solutions, it’s not enough to know the tools—you need to articulate trade-offs, demonstrate system design skills, and clearly explain operational strategies. Below are three high-value mock interview questions, along with guidance on how to effectively answer them.

1. “How would you design an end-to-end ML pipeline using open-source tools?” 🔄

Sample Approach:

You could mention Apache Airflow for orchestration, MLflow for experiment tracking, Seldon Core for model serving, and Evidently AI for monitoring. Highlight how these integrate into an MLOps pipeline:

- Data ingestion → Airflow DAG triggers ETL jobs.

- Model training → MLflow logs experiments, artifacts, and metrics.

- Deployment → Seldon Core handles REST/gRPC serving on Kubernetes.

- Monitoring → Evidently AI detects drift, and an alert or scheduled Airflow check triggers retraining jobs.

Diagram Suggestion:

Visualize this with a flowchart from the data source to the retraining loop, showing where each tool fits.

Related: For a deeper breakdown of open-source deployment, refer to Deploying Models: Seldon Core vs BentoML.

2. “What are the trade-offs between Seldon Core and SageMaker?” ⚖️

Cost & Performance Breakdown:

- Seldon Core (Open Source) → No license fees, but you manage Kubernetes infrastructure, updates, and scaling. Suitable for predictable, high-volume workloads.

- SageMaker (Managed) → Pay-per-use, less DevOps overhead, integrated features like SageMaker Model Monitor and SageMaker Autopilot, but can become costly for sustained heavy usage.

You can back up your answer with real-world numbers from AWS SageMaker Pricing.
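A quick break-even calculation is a good way to structure this answer. The sketch below treats the managed option as pure pay-per-use and the self-hosted option as a flat monthly cost (cluster plus engineering time), which is a deliberate simplification. Every figure is a placeholder and NOT a real AWS price, so look up current rates before quoting numbers, and all function names are illustrative.

```python
def monthly_cost_managed(hours: float, hourly_rate: float) -> float:
    """Pay-per-use: cost scales with instance hours."""
    return hours * hourly_rate


def monthly_cost_self_hosted(cluster_fixed: float, ops_hours: float, ops_rate: float) -> float:
    """Self-hosted: fixed cluster cost plus engineering time to run it."""
    return cluster_fixed + ops_hours * ops_rate


def break_even_hours(cluster_fixed: float, ops_hours: float, ops_rate: float, hourly_rate: float) -> float:
    """Instance hours per month at which both options cost the same."""
    return monthly_cost_self_hosted(cluster_fixed, ops_hours, ops_rate) / hourly_rate


# Placeholder figures, NOT real prices.
hours = break_even_hours(cluster_fixed=400.0, ops_hours=10.0, ops_rate=60.0, hourly_rate=0.5)
print(hours)
```

Below the break-even point the managed service is cheaper under these assumptions; above it, the fixed cost of running your own cluster starts to pay off.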

3. “How would you detect data drift in a deployed model?” 📊

Monitoring Stack Explanation:

Outline how to combine:

- Evidently AI for statistical drift detection reports.

- Prometheus to collect metrics from the drift service.

- Grafana to visualize trends and set alerts.
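The glue between these three pieces is a metric that Prometheus can scrape. As a rough sketch, the function below renders a drift score as a gauge in the Prometheus text exposition format using only the standard library. In a real service you would more likely use the official Prometheus client library. The metric name, labels, and value here are hypothetical, and label-value escaping is left out for brevity.

```python
def render_gauge(name: str, help_text: str, value: float, labels: dict[str, str]) -> str:
    """Render one gauge sample in the Prometheus text exposition format."""
    label_str = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
    return (
        f"# HELP {name} {help_text}\n"
        f"# TYPE {name} gauge\n"
        f"{name}{{{label_str}}} {value}\n"
    )


# Hypothetical metric name and labels for a drift score.
text = render_gauge(
    "model_drift_share",
    "Share of features flagged as drifted",
    0.25,
    {"model": "churn", "version": "3"},
)
print(text)
```

Once a metric like this is scraped, a Grafana panel can chart it over time and an alert rule can fire when it crosses your chosen threshold.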

You could even drop a short code snippet for Evidently AI drift detection, similar to the one in our Free & Open-Source Model Monitoring guide, to demonstrate hands-on expertise.

💡 Pro Tip for Interviews: Always connect your answer to the business impact—how monitoring prevents revenue loss, maintains user trust, and informs retraining decisions.

VIII. Recommended Learning Path to Ace Your Interview 🎓

If you want to stand out in a competitive MLOps interview—whether the topic leans toward SageMaker vs open source, system design, or pipeline orchestration—you need a structured learning plan that balances hands-on skills, theory, and interview strategy. Below is a curated selection of affiliate and free resources to help you prepare for an interview.

1. Udacity – Machine Learning DevOps Engineer Nanodegree 🛠️

This program is a powerhouse for mastering the core tools interviewers love to ask about:

- MLflow for experiment tracking and model registry.

- Apache Airflow for orchestration.

- Kubernetes for scaling ML workloads.

By the end, you’ll be able to design a production-ready ML pipeline from scratch and confidently discuss trade-offs in an interview setting.

📌 Enroll here: Machine Learning DevOps Engineer Nanodegree – Udacity

2. Exponent – MLOps & System Design Interview Prep 🎤

If your main challenge is interview performance, not just technical skills, Exponent is a worthwhile investment.

- Practice mock MLOps interview questions.

- Learn how to answer build vs buy scenarios (e.g., SageMaker vs open source).

- Get insider tips on whiteboard diagramming for architecture questions.

Check it out here: MLOps & System Design Interview Prep – Exponent.

3. Free: Kubernetes Basics on Kubernetes.io 🚢

Kubernetes comes up in almost every discussion of self-hosted MLOps. The official docs are perfect for learning:

- How pods, services, and deployments work.

- How to deploy and scale workloads.

- How to manage long-running workloads, such as model servers.

Start learning here: Kubernetes Basics – Official Docs

💡 Pro Tip: Combining these resources ensures you can speak confidently about both managed platforms like SageMaker and open-source stacks, making you a stronger candidate for both startup and enterprise roles.

IX. FAQ 🔍

Each answer below is short, direct, and conversational, and shows how the SageMaker vs. open-source question fits into interview preparation.

Q1: What are the most critical open-source MLOps tools to learn for interviews?

If you’re preparing for an MLOps interview, start with MLflow for experiment tracking, Apache Airflow for orchestration, and Seldon Core for model serving. These three cover the majority of system design and deployment questions you’ll face. You should also be familiar with monitoring tools like Evidently AI and Prometheus, as many companies now expect you to manage post-deployment model health.

📌 For hands-on learning, see the MLflow Official Docs and our guide on Deploying Models: Seldon Core vs BentoML.

Q2: How do I prepare for MLOps system design interviews?

The key is to practice designing end-to-end pipelines. Break down the process into:

- Data ingestion (e.g., Kafka, AWS Kinesis)

- Processing & training (e.g., Spark, SageMaker)

- Model serving (Seldon Core, SageMaker Endpoints)

- Monitoring & retraining (Evidently AI, Prometheus, Grafana)

Use diagramming tools like Lucidchart or Miro to sketch architectures. This will help you explain trade-offs, such as when to choose SageMaker vs open source for specific components.

📌 Check Google Cloud’s MLOps Guidelines for an authoritative blueprint.

Q3: Can I use free tools to prepare for MLOps interviews?

Absolutely ✅. Many of the top tools you’ll be asked about are open source and completely free to run locally:

- MLflow for tracking

- Airflow for orchestration

- Evidently AI for monitoring

- Minikube for running Kubernetes locally

While you can practice entirely with free tools, pairing them with cloud trials (such as AWS Free Tier) provides exposure to managed services like SageMaker, helping you answer real-world cost and scalability questions.

📌 Explore AWS Free Tier for a no-cost starting point.

X. Conclusion & Next Steps 🧭

Mastering the right MLOps tools isn’t just about checking boxes on a job description—it’s about demonstrating real-world readiness. In today’s competitive hiring market, employers value candidates who can confidently discuss trade-offs like SageMaker vs. open source and back it up with hands-on experience. By understanding the cost, scalability, and operational implications of both approaches, you position yourself as a candidate who can make informed technical and business decisions.

Why This Knowledge Gives You a Competitive Edge

Many candidates can explain how a model works, but fewer can design a production-ready ML pipeline that’s cost-efficient, scalable, and monitored effectively. Familiarity with tools like MLflow, Apache Airflow, Seldon Core, and Evidently AI means you can answer both technical and behavioral interview questions with authority. This dual skill set is especially valuable for startups and mid-size companies where engineers often wear multiple hats.

Action Step: Build Your GitHub MLOps Portfolio

The fastest way to showcase your skills is to create a public GitHub repository containing pipeline demos.

- Include an end-to-end workflow: from data ingestion to model serving.

- Document both an open-source stack and a SageMaker-based workflow so you can compare them in interviews.

- Include architecture diagrams and YAML configurations to earn bonus points.

For inspiration, check out AWS’s MLOps on AWS Workshop, which provides practical, deployable examples.

Where to Go Next

If you’re ready to go deeper into optimizing your stack for budget-conscious deployments, read our related article:

📌 Building Cost-Effective MLOps Pipelines for Startups – a guide to balancing performance with cost efficiency, including cloud vs self-host trade-offs.

By combining hands-on portfolio projects with strategic learning, you’ll not only ace your interviews but also prove you can make sound architecture and cost decisions, skills that hiring managers are actively looking for.